Enterprise Intelligence Quarter

Enterprise Intelligence Quarter (hereinafter, the Quarter) is a lecture introducing the learners to organizational research primarily through key topics related to enterprise intelligence. The Quarter is the second of four lectures of Organizational Quadrivium, which is the last of seven modules of Septem Artes Administrativi (hereinafter, the Course). The Course is designed to introduce the learners to general concepts in business administration, management, and organizational behavior.

Outline

Bookkeeping Quarter is the predecessor lecture. In the enterprise research series, the previous lecture is Regulatory Compliance Quarter.

Concepts

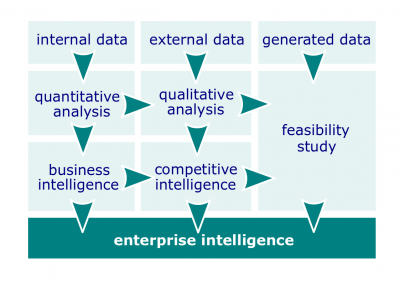

- Enterprise intelligence. Intelligence that is accountable for taking data from all data sources and processing it into useful knowledge in order to identify risks, both business threats and business opportunities, and to provide enterprises with actionable insights based on human analysis and data analytics. Enterprise intelligence is accountable for dealing with insider threats, cyber crime, physical crime, and other threats on the one side, as well as business leads and potentials on the other side.

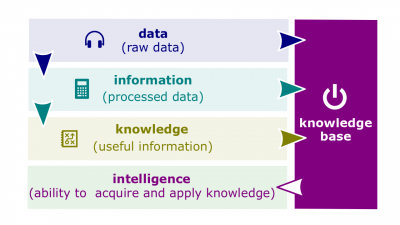

- Intelligence. (1) The ability to acquire and apply knowledge and skills; (2) The collection of information of military, political, or business value.

- Natural language processing (NLP). The ability of computers to understand or process natural human languages and derive meaning from them. NLP typically involves machine interpretation of text or speech recognition.

- Countersurveillance. Enterprise efforts such as covert surveillance undertaken to prevent surveillance.

- Single source of truth.

- All-source intelligence. (1) Intelligence products and/or organizations and activities that incorporate all sources of information, most frequently including human resources intelligence, imagery intelligence, measurement and signature intelligence, signals intelligence, and open-source data in the production of finished enterprise intelligence; (2) In intelligence collection, a phrase that indicates that in the satisfaction of intelligence requirements, all collection, processing, exploitation, and reporting systems and resources are identified for possible use and those most capable are tasked.

- Human resources intelligence (HUMINT). The intelligence derived from the intelligence collection discipline that uses human beings as both sources and collectors, and where the human being is the primary collection instrument.

- Signals intelligence. The branch of usually military intelligence concerned with the monitoring, interception, and interpretation of radio signals, radar signals, and telemetry.

- Imagery intelligence (IMINT). An intelligence gathering discipline which collects information via satellite and aerial photography. As a means of collecting intelligence, IMINT is a subset of intelligence collection management, which, in turn, is a subset of intelligence cycle management.

- Measurement and signature intelligence (MASINT). A technical branch of intelligence gathering, which serves to detect, track, identify or describe the signatures (distinctive characteristics) of fixed or dynamic target sources.

- Customer intelligence. Searching for customer opinions on the Internet, such as in chats, forums, web pages, and blogs, where people freely express their experiences with products and become strong opinion formers.

- Intelligence data. Enterprise data used in enterprise intelligence.

- Known known. Any fact or situation which is known or familiar.

- Known unknown (or identified risk). Any risk that is identified.

- Unknown known. Any fact or situation that is known to exist, but about which little to no detail is known.

- Unknown unknown (or unidentified risk). Any risk that is unidentified.

- Objective intelligence assumption.

- Data-collection assumption. Data collection is never completely implemented.

- Data-analysis assumption. If complete data can be collected, it is never completely analyzed.

- Data-synthesis assumption. If complete data analysis can be accomplished, the analysis results are never conclusive.

- Data-presentation assumption. If complete data analysis can be accomplished and the analysis results are conclusive, they are never presented properly to decision makers.

- Data-usage assumption. If complete data analysis can be accomplished, and the analysis results are conclusive and properly presented to decision makers, they are never used for decision-making because of internal or external factors.

- Risk analysis. Controlling residual risks, identifying new risks, executing risk response plans, and evaluating their effectiveness throughout an enterprise effort.

- Risk event. A discrete occurrence that may affect the project for better or worse.

- Trigger. Triggers, sometimes called risk symptoms or warning signs, are indications that a risk has occurred or is about to occur. Triggers may be discovered in the risk identification process and watched in the risk monitoring and control process.

- Risk category. A source of potential risk reflecting technical, project management, organizational, or external sources.

- Risk. (1) An uncertain event or condition that, if it occurs, has a positive or negative effect on an enterprise effort; (2) A situation involving exposure to danger.

- Secondary risk. A risk that arises as a direct result of implementing a risk response.

- Residual risk. A risk that remains after risk responses have been implemented.

- Risk culture. The part of organizational culture that accounts for risk appetite, risk tolerance, and risk threshold.

- Risk appetite. The degree of uncertainty an enterprise is willing to take on in anticipation of a reward.

- Risk tolerance. The degree, amount, or volume of the risk that an enterprise will withstand.

- Risk threshold. The level of impact at which one or more stakeholders may have a specific interest. Below the risk threshold, the enterprise will accept the risk, and above the risk threshold, the enterprise will not tolerate the risk.

- Competitive intelligence. The ability to control market research, to identify the data about the competitors, to process the identified data in order to acquire knowledge, and to apply the knowledge towards understanding and anticipating competitors' actions rather than merely react to them.

- Competition. The activity or condition of competing on the market. The buyers may compete over purchases of a market exchangeable; more frequently, the sellers compete over sales of a market exchangeable.

- Competitive analysis. A structured process which captures the key characteristics of an industry to predict the long-term profitability prospects and to determine the practices of the most significant competitors.

- Competitor analysis. Performing an audit or conducting user testing of competing websites and apps; writing a report that summarizes the competitive landscape.

- Business intelligence. Information that managers can use to make more effective strategic decisions.

- Anonymization. The severing of links between people in a database and their records to prevent the discovery of the source of the records, and to maintain privacy and confidentiality.

- Association. A link between two elements or objects in a diagram.

- Systematic study. Looking at relationships, attempting to attribute causes and effects, and drawing conclusions based on scientific evidence.

- Behavioral analytics. Using data about people's behavior to understand intent and predict future actions.

- Data curation. The management of data throughout its lifecycle, from creation and initial storage to the time when it is archived for posterity or becomes obsolete, and is deleted. The main purpose of data curation is to ensure that data is reliably retrievable for future research purposes or reuse.

- Data validation. The process of ensuring that data has undergone data cleansing and has data quality, that is, that the data is both correct and useful.

- Data gap. Identification of data gaps in available information in reference to a particular procurement.

- Data manipulation. The action of manipulating data in a skillful manner.

- Filter. A mechanism that includes or excludes specific data from reports based upon what the user decides to filter (e.g., to tightly tailor a report, you might strictly want records of customers between the ages of 25 and 35 who like skiing, but you want to exclude everyone else).

- Big data. The vast amount of quantifiable information that can be analyzed by highly sophisticated data processing.

- Contextual data. A structuring of big data that attaches situational contexts to single elements of big data to enrich them with business meaning (e.g., instead of a customer record that tells you only the customer's name and address, data appended to this record also gives the customer's buying preferences, which are gathered from recent web activity data). The result is a more complete understanding of the customer and her lifestyle.

- Multipolar analytics. A distributed big data model where data is collected, stored, and analyzed in different areas of the company instead of being centrally located and analyzed.

- Data visualization. A method of putting data in a visual or a pictorial context as a way to assist users in better understanding what the data are telling them (e.g., a map is a way to visualize which areas of the country get the most rainfall).

- Data point. An individual item on a graph or a chart.

- Artificial intelligence (AI). A field of computer science dedicated to the study of computer software making intelligent decisions, reasoning, and problem solving.

- Strong AI. An area of AI development that is working toward the goal of making AI systems that are as useful and skilled as the human mind.

- Weak AI. Also known as narrow AI, weak AI refers to a non-sentient computer system that operates within a predetermined range of skills and usually focuses on a singular task or small set of tasks. Most AI in use today is weak AI.

- Algorithm. A formula or set of rules for performing a task. In AI, the algorithm tells the machine how to go about finding answers to a question or solutions to a problem.

- AI planning. A branch of AI dealing with planned sequences or strategies to be performed by an AI-powered machine. Things such as actions to take, variables to account for, and duration of performance are considered.

- Autonomous. Autonomy is the ability to act independently of a ruling body. In AI, a machine or vehicle is referred to as autonomous if it doesn't require input from a human operator to function properly.

- Data mining. The process by which patterns are discovered within large sets of data with the goal of extracting useful information from them.

- Heuristics. These are rules drawn from experience used to solve a problem more quickly than traditional problem-solving methods in AI. While faster, a heuristic approach typically is less optimal than the classic methods it replaces.

- Pruning. The use of a search algorithm to cut off undesirable solutions to a problem in an AI system. It reduces the number of decisions that can be made by the AI system.

- Machine learning. A field of AI focused on getting machines to act without being explicitly programmed to do so. Machines "learn" from patterns they recognize and adjust their behavior accordingly.

- Artificial neural network (ANN). A learning concept based on the biological neural networks present in the brains of animals. Based on the activity of neurons, ANNs are used to solve tasks that would be too difficult for traditional methods of programming.

- Deep learning. A subset of machine learning that uses specialized algorithms to model and understand complex structures and relationships among data and datasets.

- Backpropagation. Short for "backward propagation of errors," backpropagation is a way of training neural networks based on a known, desired output for a specific sample case.

- Weights. The connection strength between units, or nodes, in a neural network. These weights can be adjusted in a process called learning.

- Artificial reasoning. The action of thinking about something in a logical, sensible way used in artificial intelligence.

- Analogical reasoning. Solving problems by using analogies, by comparing to past experiences.

- Case-based reasoning (CBR). An approach to knowledge-based problem solving that uses the solutions of a past, similar problem (case) to solve an existing problem.

- Inductive reasoning. In AI, inductive reasoning uses evidence and data to create statements and rules.

- Artificial chaining. The action of connecting various probable activities in a chain used in artificial intelligence.

- Backward chaining. A method in which machines work backward from the desired goal, or output, to determine if there is any data or evidence to support those goals or outputs.

- Forward chaining. A situation where an AI system must work "forward" from a problem to find a solution. Using a rule-based system, the AI would determine which "if" rules it would apply to the problem.

- Forecasting. A process that uses historical data to predict future outcomes.

- Forecast. Prediction of outcome.

- Qualitative forecasting. Forecasting that uses the judgment and opinions of knowledgeable individuals to predict outcomes.

- Quantitative forecasting. Forecasting that applies a set of mathematical rules to a series of past data to predict outcomes.
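
Quantitative forecasting, as defined above, applies a mathematical rule to a series of past data. A minimal sketch of one such rule, a simple moving average, is shown below. The function name `moving_average_forecast`, the window size, and the sales figures are hypothetical and serve only as an illustration; the lecture does not prescribe any particular forecasting rule.

```python
from statistics import mean


def moving_average_forecast(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    if window < 1 or len(history) < window:
        raise ValueError("need at least `window` past observations")
    return mean(history[-window:])


# Hypothetical monthly sales figures, used only for illustration.
monthly_sales = [120, 130, 125, 140, 150, 145]

# The forecast for the next month averages the three most recent months.
next_month = moving_average_forecast(monthly_sales, window=3)
print(next_month)
```

A larger window smooths out short-term noise but reacts more slowly to recent changes, so the window size is a judgment call rather than a fixed rule.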

Roles

- Intelligence analyst. A professional who gathers, analyzes, or evaluates information from a variety of sources, such as law enforcement databases, surveillance, intelligence networks or geographic information systems. He or she uses intelligence data to anticipate and prevent counterproductive work behavior and other unwelcome activities.

- Business continuity planner. A professional engaged in developing, maintaining, or implementing business continuity and disaster recovery strategies and solutions, including risk assessments, business impact analyses, strategy selection, and documentation of business continuity and disaster recovery procedures. He or she plans, conducts, and debriefs regular mock-disaster exercises to test the adequacy of existing plans and strategies, updating procedures and plans regularly. An incumbent of this role acts as a coordinator for continuity efforts after a disruption event.

- Business intelligence analyst. A professional who produces financial and market intelligence by querying data repositories and generating periodic reports. He or she devises methods for identifying data patterns and trends in available information sources.

Methods

- Risk-response technique. An established procedure for developing plans for responding to risks if they occur.

- Opportunity-response technique. An established way of developing plans for responding to opportunities if they occur.

- Threat-response technique. An established way of developing plans for responding to threats if they occur.

- Risk acceptance (risk-response avoidance). This technique of the risk response planning process indicates that the project team has decided not to change the project plan to deal with a risk, or is unable to identify any other suitable response strategy.

- Threat prevention (threat avoidance). Risk avoidance is changing the project plan to eliminate the risk or to protect the project objectives from its impact. It is a tool of the risk response planning process.

- Threat mitigation. Risk mitigation seeks to reduce the probability and/or impact of a risk to below an acceptable threshold.

- Risk transference. Risk transference is seeking to shift the impact of a risk to a third party together with ownership of the response.

- Simulation. A simulation uses a project model that translates the uncertainties specified at a detailed level into their potential impact on objectives that are expressed at the level of the total project. Project simulations use computer models and estimates of risk at a detailed level, and are typically performed using the Monte Carlo method.

- Monte Carlo method. A technique that performs a project simulation many times to calculate a distribution of likely results.

- Turing test. A test developed by Alan Turing that tests the ability of a machine to mimic human behavior. The test involves a human evaluator who undertakes natural language conversations with another human and a machine and rates the conversations.
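
The Monte Carlo method listed above runs a project simulation many times to obtain a distribution of likely results. The sketch below illustrates the idea under stated assumptions: the task names, the three-point duration estimates, the triangular distribution, and the run count are all hypothetical choices made for the example, not part of the lecture.

```python
import random
from statistics import mean, quantiles

# Hypothetical three-point estimates in days: (optimistic, most likely, pessimistic).
TASKS = {
    "collect data": (3, 5, 10),
    "analyze data": (4, 6, 12),
    "present findings": (1, 2, 4),
}


def simulate_total_duration(tasks, rng):
    """One simulation run: sample a duration for every task and sum them."""
    return sum(
        rng.triangular(low, high, mode) for low, mode, high in tasks.values()
    )


def monte_carlo(tasks, runs=10_000, seed=42):
    """Repeat the simulation `runs` times and return the sorted totals."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return sorted(simulate_total_duration(tasks, rng) for _ in range(runs))


results = monte_carlo(TASKS)

# Deciles summarize the distribution of simulated project durations.
deciles = quantiles(results, n=10)
p10, p50, p90 = deciles[0], deciles[4], deciles[8]
print(f"mean={mean(results):.1f} days, P10={p10:.1f}, P50={p50:.1f}, P90={p90:.1f}")
```

Reading the result as a range (for example, the span between the 10th and 90th percentiles) rather than as a single number is what distinguishes this approach from a single-point estimate.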

Instruments

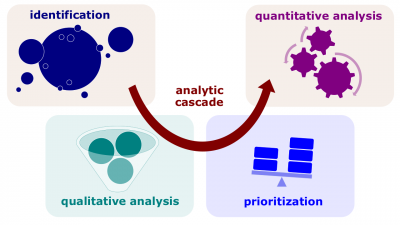

- Analytic cascade. A model used to analyze uncertain factors such as risks, targets, concepts, sources, data, etc. These factors are identified first, qualitatively analyzed second, prioritized third, and selected for further analysis fourth; those selected as the most important are quantitatively analyzed fifth.

- Uncertain factor identification. Determining which risks might affect the project and documenting their characteristics. Tools used include brainstorming and checklists.

- Qualitative analysis. Performing a qualitative analysis of risks and conditions to prioritize their effects on project objectives. It involves assessing the probability and impact of project risk(s) and using methods such as the probability and impact matrix to classify risks into categories of high, moderate, and low for prioritized risk response planning.

- Quantitative analysis. Measuring the probability and consequences of risks and estimating their implications for project objectives. Risks are characterized by probability distributions of possible outcomes. This process uses quantitative techniques such as simulation and decision tree analysis.

- Uncertain factor prioritization. The process that arranges proposed changes in order of importance relative to each other based on a review of the means available and the impact of trade-offs to attain the objectives.

- Prioritization. The process of determining the relative importance of a set of items in order to determine the order in which they will be addressed.

- Probability and impact matrix. A common way to determine whether a risk is considered low, moderate, or high by combining the two dimensions of a risk: its probability of occurrence and its impact on objectives if it occurs.
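
The probability and impact matrix described above can be sketched as a small classification function. This is an illustration only: the 0-to-1 scales, the multiplication of the two dimensions into a score, the cutoffs `moderate_at` and `high_at`, and the sample risks are assumptions introduced for this example, and an enterprise would set its own scales and cutoffs.

```python
def classify_risk(probability, impact, moderate_at=0.10, high_at=0.30):
    """Return 'low', 'moderate', or 'high' for a single risk.

    Both inputs are on a 0-to-1 scale; the cutoffs are illustrative assumptions.
    """
    if not (0 <= probability <= 1 and 0 <= impact <= 1):
        raise ValueError("probability and impact must be between 0 and 1")
    score = probability * impact  # combine the two dimensions of the risk
    if score >= high_at:
        return "high"
    if score >= moderate_at:
        return "moderate"
    return "low"


# Hypothetical risks: name -> (probability of occurrence, impact on objectives).
risks = {
    "insider threat": (0.9, 0.8),
    "supplier delay": (0.5, 0.4),
    "office relocation": (0.1, 0.2),
}

ratings = {name: classify_risk(p, i) for name, (p, i) in risks.items()}

# List the risks from the highest score to the lowest.
for name, (p, i) in sorted(risks.items(), key=lambda kv: -(kv[1][0] * kv[1][1])):
    print(f"{name}: {ratings[name]}")
```

Sorting by the combined score gives a simple ordering that can feed prioritized risk response planning.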

Results

- Risk database. A repository that provides for collection, maintenance, and analysis of data gathered and used in the risk management processes. A lessons-learned program uses a risk database. This is an output of the risk monitoring and control process.

Practices

Organizational Culture Quarter is the successor lecture. In the enterprise envisioning series, the next lecture is Enterprise Architecture Quarter.